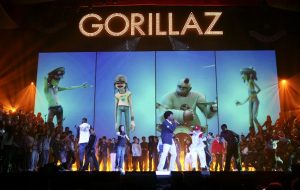

This modern evolution of the sound system is offered by a host of companies, including L-Acoustics, d&b audiotechnik, Flux and Meyer Sound. L-Acoustics’ L-ISA Immersive Hyperreal Sound technology created the 360-degree soundscape for Bon Iver’s 2018 North American tour. Its impact was telling: audience members were transfixed by the band on stage rather than distracted by their phones. The technology makes a large venue feel like an intimate listening space, and audience reviews of shows that have used it call the experience life-changing.

Added to a performance already stacked with lushly calibrated video and lighting, the system connected the sight of a musical performance with its sound. The bridge between these two senses is an unusual array of speakers that hangs above the stage. This was a site-specific implementation of “Hyperreal Sound” by L-ISA from L-Acoustics, which combines art and science to overcome the long-standing limitations of stereo and deliver spatialized audio to the large majority (90%) of the audience.

How It Is Done

The immersive sound system takes audio inputs (typically direct outs) from a conventional mix console and routes them to a specialised hardware processor. Controller software running on Mac or Windows provides a single-screen visualization of all sound objects in the 3D mixing space, with easy access to spatial parameters such as pan, width, distance and elevation for each sound object.

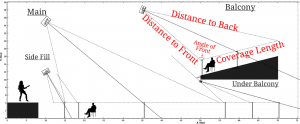

The playback side uses five arrays over the stage (with or without subwoofers) and optional “extension” speakers, which can widen the coverage to the side walls. Together these make up the “frontal” system; further options include overhead or surround speakers. Simulation tools such as L-Acoustics’ Soundvision, an acoustical and mechanical simulation program capable of operating in real time, calculate SPL coverage, SPL mapping and delay coverage for complex stereo or multichannel system and venue configurations.
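To give a feel for what “SPL coverage” and “delay coverage” mean, here is a minimal Python sketch. It is not how Soundvision works internally; it assumes an idealised point source in a free field, where level falls by about 6 dB for each doubling of distance and sound travels at roughly 343 m/s. The function names and the 100 dB reference level are hypothetical, chosen only for illustration. Real line arrays and real rooms deviate from this simple model.

```python
import math

SPEED_OF_SOUND_M_S = 343.0  # approximate value for dry air at about 20 °C


def spl_at_distance(spl_ref_db, distance_m, ref_distance_m=1.0):
    """Estimate SPL at distance_m from a point source in a free field.

    spl_ref_db is the level measured at ref_distance_m. The inverse-square
    law gives a drop of 20*log10(distance ratio) dB.
    """
    if distance_m <= 0 or ref_distance_m <= 0:
        raise ValueError("distances must be positive")
    return spl_ref_db - 20.0 * math.log10(distance_m / ref_distance_m)


def propagation_delay_ms(distance_m):
    """Time in milliseconds for sound to travel distance_m."""
    if distance_m < 0:
        raise ValueError("distance must not be negative")
    return 1000.0 * distance_m / SPEED_OF_SOUND_M_S


def coverage_table(spl_ref_db, distances_m):
    """Return (distance, SPL, delay) tuples for a list of listener distances."""
    return [
        (d, spl_at_distance(spl_ref_db, d), propagation_delay_ms(d))
        for d in distances_m
    ]


if __name__ == "__main__":
    # Hypothetical example: 100 dB at 1 m, listeners at 5 m to 40 m.
    for d, spl, delay in coverage_table(100.0, [5.0, 10.0, 20.0, 40.0]):
        print(f"{d:5.1f} m  {spl:6.1f} dB  {delay:6.1f} ms")
```

A simulation program does far more than this (array geometry, frequency dependence, venue surfaces), but the two quantities it maps across the audience area are the same in kind: how loud each seat is, and how late the sound arrives there.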

With more than 100 inputs going into the mix even when there’s just a five-piece version of the band on stage, the technology’s object-based audio capabilities allow for spacious separation and distinction between sonic elements. Each audio object is assigned a location within the 3D image of the mix, and then, using spatial processing, front-of-house (FOH) engineers can select from a variety of sensory shape-shifting options.

The spatial thought process in mixing is a fascinating one: things can be made to sound close or far away. Width can be added so the vocals are big, while a rhythm guitar can be tucked into the back of the mix. Audience members can pick up the details of sounds no matter where they are standing in the venue. The resulting soundscape is panoramic and natural, much like what one expects to hear in real life.
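The idea of a sound object with pan and width can be sketched in a few lines of Python. This is an illustration only, not L-ISA’s actual algorithm: it assumes five evenly spaced arrays and uses simple constant-power panning between neighbouring arrays, with width modelled by spreading the object’s power over a range of pan positions. The `SoundObject` class, its value ranges and the function names are hypothetical. Distance and elevation are carried as fields but not processed, since in practice they involve level, reverberation and overhead speakers.

```python
import math
from dataclasses import dataclass


@dataclass
class SoundObject:
    """Hypothetical description of one object in the mix."""
    name: str
    pan: float              # -1.0 (far left) to 1.0 (far right)
    width: float = 0.0      # 0.0 (point source) to 1.0 (whole stage)
    distance: float = 0.0   # carried for completeness, not used below
    elevation: float = 0.0  # carried for completeness, not used below


def pairwise_gains(pan, num_arrays=5):
    """Constant-power pan between the two arrays nearest to pan."""
    if num_arrays < 2:
        raise ValueError("need at least two arrays")
    pan = max(-1.0, min(1.0, pan))
    pos = (pan + 1.0) / 2.0 * (num_arrays - 1)  # 0 .. num_arrays - 1
    left = min(int(math.floor(pos)), num_arrays - 2)
    frac = pos - left
    gains = [0.0] * num_arrays
    gains[left] = math.cos(frac * math.pi / 2.0)
    gains[left + 1] = math.sin(frac * math.pi / 2.0)
    return gains


def object_gains(obj, num_arrays=5, samples=9):
    """Per-array gains for an object, spreading its power over its width."""
    if obj.width <= 0.0:
        pans = [obj.pan]
    else:
        half = obj.width  # width 1.0 spreads one full pan unit either side
        pans = [obj.pan - half + 2.0 * half * i / (samples - 1)
                for i in range(samples)]
    power = [0.0] * num_arrays
    for p in pans:
        for i, g in enumerate(pairwise_gains(p, num_arrays)):
            power[i] += g * g / len(pans)
    # Averaging power keeps the total output power constant.
    return [math.sqrt(p) for p in power]


if __name__ == "__main__":
    vocal = SoundObject("lead vocal", pan=0.0, width=0.5)
    guitar = SoundObject("rhythm guitar", pan=-0.6)
    for obj in (vocal, guitar):
        print(obj.name, [round(g, 3) for g in object_gains(obj)])
```

With this kind of model, a narrow object lands on one or two arrays near its position on stage, while a wide object such as a big vocal is shared across several arrays without changing its overall power.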

All this invisible arrangement of sound somehow intensifies the visual element of what the audience is seeing on stage. What this immersive sound technology attempts to do is re-harmonize and reconnect the visual and auditory senses. When audience members can better perceive where the sound is coming from on stage, it creates a real distinction between instruments and voices, localizing each to its space within the performance. Most importantly, this makes the show feel more intimate.

Immersive sound technology has benefits that go beyond spatiality and placement considerations. In addition to increased clarity, show levels offered a few surprises in terms of perceived loudness. Engineers reported that they were mostly running at 102 dB, up to about 105 or 107 dB. The sound feels much louder than the measured level suggests.

The Future of Augmented Reality Awaits

While the technological advances in lights and video have been tremendous, audio has mostly been limited to left/right hangs. But now, speakers can be moved farther out to accommodate larger video walls, and fans can be wowed by the musical experience itself.

The logistics of using this technology are far more complex than for a conventional system, with more consideration and coordination required between teams, including video and lighting. Live sound engineers and technicians also need a thorough understanding of the processors and the workings of the technology to use it skillfully. There is more equipment involved than usual, and planning and production have to keep up with this complexity. Besides concerts, immersive sound technology has already found use in places of worship, with orchestras and at alternative sound events such as sound baths.

The team at Audio Academy has been keeping abreast of the latest developments and looks forward to including immersive sound in its live sound training program once the time is right.